피터의 개발이야기

[CKA] Udemy Practice Test Solutions - Security


ㅁ Introduction

ㅇ Study notes from the Udemy CKA course: Practice Tests, Security.

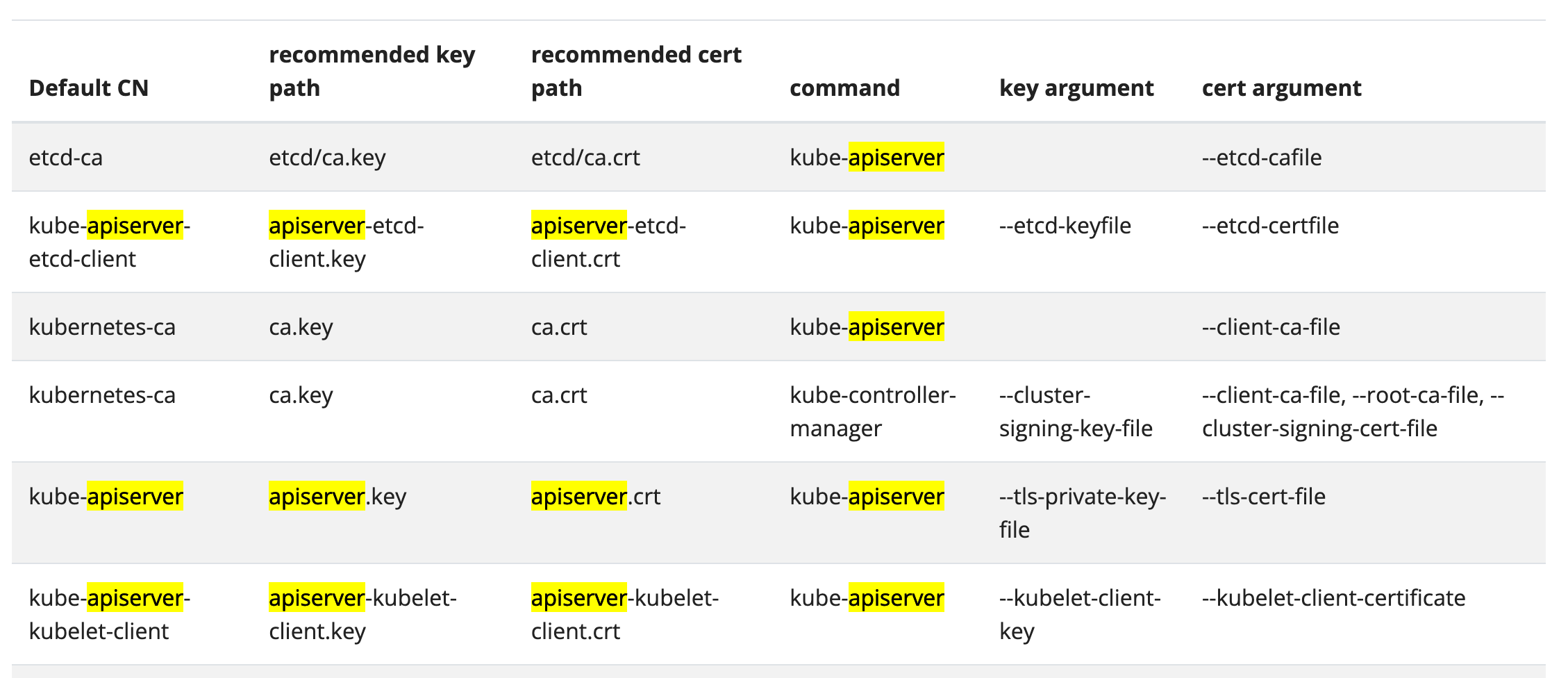

ㅁ View Certificate Details

ㅇ Certificates

When client certificates are used for authentication, you can generate them manually with easyrsa, openssl, or cfssl.

# Which certificate file does the kube-apiserver use?

$ cat /etc/kubernetes/manifests/kube-apiserver.yaml | grep "tls-cert-file"

ㄴ kube doc: search for "certificate file" + "apiserver"

# Certificate issuer

$ openssl x509 -in /etc/kubernetes/pki/apiserver.crt -text

Certificate:

Data:

Version: 3 (0x2)

Serial Number: 2062008844972784957 (0x1c9dba1e9850fd3d)

Signature Algorithm: sha256WithRSAEncryption

Issuer: CN = kubernetes <=========

Validity

# Subject Alternative Names (SANs) on the kube-apiserver certificate?

$ openssl x509 -in /etc/kubernetes/pki/apiserver.crt -text

..................

X509v3 Subject Alternative Name:

DNS:controlplane, DNS:kubernetes, DNS:kubernetes.default, DNS:kubernetes.default.svc, DNS:kubernetes.default.svc.cluster.local, IP Address:10.96.0.1, IP Address:192.31.111.3

..................
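Both answers above are plain text lookups in the openssl output. As a rough study sketch (not part of the lab), the Python below pulls the issuer CN, subject CN and SAN entries out of `openssl x509 -text` output and checks whether a host name or IP is covered. It assumes OpenSSL's usual `CN = value` formatting and does exact matching only (no wildcard SANs); the sample text is trimmed and illustrative.

```python
import ipaddress
import re


def parse_cert_text(text: str) -> dict:
    """Extract issuer CN, subject CN and SANs from `openssl x509 -text` output."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    info = {"issuer_cn": None, "subject_cn": None, "dns": [], "ip": []}
    for i, line in enumerate(lines):
        for field, key in (("Issuer", "issuer_cn"), ("Subject", "subject_cn")):
            # e.g. "Issuer: CN = kubernetes"; re.match anchors at the start of the line
            match = re.match(field + r":.*?CN\s*=\s*([^,]+)", line)
            if match:
                info[key] = match.group(1).strip()
        if line.startswith("X509v3 Subject Alternative Name") and i + 1 < len(lines):
            # openssl prints every SAN on the line after the header, comma-separated
            for entry in lines[i + 1].split(","):
                kind, _, value = entry.strip().partition(":")
                if kind == "DNS":
                    info["dns"].append(value)
                elif kind == "IP Address":
                    info["ip"].append(value)
    return info


def covers(info: dict, host: str) -> bool:
    """Exact-match check of a host name or IP against the SAN list (no wildcards)."""
    try:
        ipaddress.ip_address(host)
    except ValueError:
        return host in info["dns"]
    return host in info["ip"]


# Illustrative, trimmed sample of `openssl x509 -in apiserver.crt -text` output
SAMPLE = """\
Certificate:
    Data:
        Issuer: CN = kubernetes
        Subject: CN = kube-apiserver
        X509v3 extensions:
            X509v3 Subject Alternative Name:
                DNS:controlplane, DNS:kubernetes, DNS:kubernetes.default.svc, IP Address:10.96.0.1
"""
```

A client that connects to the API server as kubernetes.default.svc or 10.96.0.1 is accepted only because those names appear in the SAN list; the same parser also answers the ETCD CN question below via subject_cn.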

# Common Name (CN) configured on the ETCD Server certificate?

# First find the ETCD server certificate path from the etcd pod's --cert-file flag => /etc/kubernetes/pki/etcd/server.crt

$ k -n kube-system get po etcd-controlplane -o yaml | grep etcd

............................................................

kubeadm.kubernetes.io/etcd.advertise-client-urls: https://192.31.111.3:2379

component: etcd

name: etcd-controlplane

- etcd

- --cert-file=/etc/kubernetes/pki/etcd/server.crt

............................................................

$ openssl x509 -in /etc/kubernetes/pki/etcd/server.crt -text

# The CN is shown on the "Subject:" line of this output.

$ ls -l /etc/kubernetes/pki/etcd/server* | grep .crt

-rw-r--r-- 1 root root 1188 May 20 00:41 /etc/kubernetes/pki/etcd/server.crt
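Grepping the YAML works, but the same lookup can be done on the pod's JSON. A minimal sketch, assuming the JSON comes from `kubectl -n kube-system get po etcd-controlplane -o json` and that flags are written in `--flag=value` form, as in kubeadm-generated static pod manifests; the sample pod below is trimmed and hypothetical.

```python
from typing import Optional


def flag_value(pod: dict, flag: str, container: str = "etcd") -> Optional[str]:
    """Return the value of a `--flag=value` argument of one container in a Pod object."""
    for c in pod["spec"]["containers"]:
        if c["name"] != container:
            continue
        # flags may be placed in either command or args
        for arg in c.get("command", []) + c.get("args", []):
            # exact prefix, so "--peer-cert-file=..." does not match "--cert-file"
            if arg.startswith(flag + "="):
                return arg.split("=", 1)[1]
    return None


# Hypothetical, trimmed etcd pod object
SAMPLE_POD = {
    "spec": {
        "containers": [
            {
                "name": "etcd",
                "command": [
                    "etcd",
                    "--advertise-client-urls=https://192.31.111.3:2379",
                    "--cert-file=/etc/kubernetes/pki/etcd/server.crt",
                    "--peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt",
                ],
            }
        ]
    }
}
```

Saving the kubectl output to a file and loading it with json.load gives the same dict shape as SAMPLE_POD.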

ㅁ Certificates API

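# Certificate Signing Request: base64-encode akshay.csr on a single line (-w 0 disables line wrapping) and put it in spec.request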

$ cat akshay.csr | base64 -w 0

$ vi akshay-csr.yaml

apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: akshay
spec:
  groups:
  - system:authenticated
  request: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ1ZqQ0NBVDRDQVFBd0VURVBNQTBHQTFVRUF3d0dZV3R6YUdGNU1JSUJJakFOQmdrcWhraUc5dzBCQVFFRgpBQU9DQVE4QU1JSUJDZ0tDQVFFQSsyWWlKSFBxcHFFQ2dzWlRiZjVoRHgzQjRLZHkrR1ZtdTU0SXk1Tis5OTR4CmhWRlNydVF5cGF5QUFpWXl2cGwzVUNGdHZ0a3dWRi9RajExWllaN0Y2YlVLcHpjandhS3dvMkpvTDhGbEJnTnUKdUNpNFF2czdlc0I4RGdPZE4reWFKMTBmTFJhT0tHeWFqcGw2Z21yaGJ5NC9SWVY5QzNkeGVXSHgvWU9KZkdIdQp2RXU1ME9wWHhsMmpUdTV0YkkzYk1PTmxRN0g2UVhDQ3p4QjJBUWNyZDhUbmtiejR6UVIvNWRNV1BjYkVhZnJYCkd0ajJWeExwaC82czZLVElwRFVEek5yc2F4OTU3UkV1YlFWaFJIQzVMMElVMCsrSzM5MXZCTnpPYjdDTjdRTmQKTVZuSTFYOXhPcGVrbGorY1NPZThuYnVCSTVBZGwxb0VSeFk3MmcrbnF3SURBUUFCb0FBd0RRWUpLb1pJaHZjTgpBUUVMQlFBRGdnRUJBRVdpbGtOVno4OEZvRVJ3YVVYWFl0aE8wek4zb3EyVzV5c25NaHNUZGFmZmxXcm53TkZTCjFkWW1Db2JJTHQ0OWl6NVdZbFZkOHk4NGhzVW9VQzZHelozSldlUGl3UTNuOStZQ1VaSkFIMUxnb3VVclJScFkKTU1ENGx6bzN1SHBhVStOdEt4RTcrWDJMcGdJOGhKODhkVTFxSEZEOU05OW4xWkxFK2VyTkI2UVkxbmlhbm1jZgpSWGRsZ3k0WndRemxnOVpCOVg4d0l4Zmtlbmg5Rk10RWVYVkFPc0FSNEZ1VXZjWEV3c1FZUVVhMTJva1lsQ0UrClhMb1Ewd1hIa1pTZlVWWXpjM2hwVUlSNm5wV0pHQm14M2gzc1RVeWJDSHA3c3JwekE3MlF3TWFxaDNpaW1zdDYKYm8rT1k2YmJSQmg3Ui95dDJhNy9Iekw5UytqVW9oOUhpMDA9Ci0tLS0tRU5EIENFUlRJRklDQVRFIFJFUVVFU1QtLS0tLQo=
  signerName: kubernetes.io/kube-apiserver-client
  usages:
  - client auth

$ k apply -f akshay-csr.yaml
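The only non-obvious step in the manifest is spec.request, which must be the whole PEM file as one unwrapped base64 string. A small sketch that builds the same object in Python, assuming you would dump it with json.dumps and pass the resulting file to kubectl apply -f (kubectl accepts JSON as well as YAML); the function names are illustrative only.

```python
import base64
import json


def encode_csr(csr_pem: bytes) -> str:
    """Same result as `base64 -w 0`: standard base64, no line wrapping."""
    return base64.b64encode(csr_pem).decode("ascii")


def csr_manifest(name: str, csr_pem: bytes) -> dict:
    """CertificateSigningRequest mirroring akshay-csr.yaml above."""
    return {
        "apiVersion": "certificates.k8s.io/v1",
        "kind": "CertificateSigningRequest",
        "metadata": {"name": name},
        "spec": {
            "groups": ["system:authenticated"],
            "request": encode_csr(csr_pem),
            # this signer issues client certificates for authenticating to the API server
            "signerName": "kubernetes.io/kube-apiserver-client",
            "usages": ["client auth"],
        },
    }
```

Usage would be something like reading akshay.csr in binary mode and printing json.dumps(csr_manifest("akshay", data), indent=2). Every PEM CSR starts with the same 36-byte header line, which is why every request field begins with LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0K.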

ㅇ Approve the CertificateSigningRequest

# Approve the CSR

$ kubectl certificate approve akshay

certificatesigningrequest.certificates.k8s.io/akshay approved

ㅇ Approval or rejection using kubectl

$ k certificate deny agent-smith

certificatesigningrequest.certificates.k8s.io/agent-smith denied

# Delete the CSR

$ k delete certificatesigningrequests.certificates.k8s.io agent-smith

certificatesigningrequest.certificates.k8s.io "agent-smith" deleted
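kubectl certificate approve and deny record an Approved or Denied condition in the CSR's status.conditions, and the signer later fills in status.certificate. The sketch below approximates how the CONDITION column of kubectl get csr summarises those fields; it assumes the input is an item from kubectl get csr -o json and is a simplification, not kubectl's actual code.

```python
def csr_condition(csr: dict) -> str:
    """Approximate the CONDITION column of `kubectl get csr` for one CSR object."""
    status = csr.get("status", {})
    types = {c.get("type") for c in status.get("conditions", [])}
    # Denied takes precedence over Approved; no decision yet means Pending
    if "Denied" in types:
        summary = "Denied"
    elif "Approved" in types:
        summary = "Approved"
    else:
        summary = "Pending"
    if "Failed" in types:
        summary += ",Failed"
    # once the signer has issued the certificate, status.certificate is non-empty
    if status.get("certificate"):
        summary += ",Issued"
    return summary


def pending(csrs: list) -> list:
    """Names of CSRs still waiting for `kubectl certificate approve` or `deny`."""
    return [c["metadata"]["name"] for c in csrs if csr_condition(c) == "Pending"]
```

Feeding it the items list from kubectl get csr -o json would give the names that still need a decision, which is a quick way to spot requests like agent-smith in the lab.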

ㅁ kubeConfig

# Default kubeconfig location

/root/.kube/config

# View a different kubeconfig file

$ kubectl config view --kubeconfig my-kube-config

# List the clusters defined in a different kubeconfig file

$ k config get-clusters --kubeconfig my-kube-config

# Switch the current context of a different kubeconfig file

$ k config --kubeconfig /root/my-kube-config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

aws-user@kubernetes-on-aws kubernetes-on-aws aws-user

research test-cluster-1 dev-user

* test-user@development development test-user

test-user@production production test-user

$ k config --kubeconfig /root/my-kube-config use-context research

Switched to context "research".
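A kubeconfig maps each context name to a cluster, a user and an optional namespace, and use-context only rewrites current-context. A small sketch of that resolution, assuming the config has been dumped as JSON with kubectl config view --kubeconfig /root/my-kube-config -o json; the dictionary below simply mirrors the get-contexts listing above, and the error message is illustrative.

```python
import copy


def context_info(config: dict, name=None) -> dict:
    """Resolve a context (default: current-context) to its cluster, user and namespace."""
    name = name or config.get("current-context")
    for entry in config.get("contexts", []):
        if entry["name"] == name:
            ctx = entry["context"]
            return {
                "context": name,
                "cluster": ctx.get("cluster"),
                "user": ctx.get("user"),
                # when a context sets no namespace, kubectl uses "default"
                "namespace": ctx.get("namespace", "default"),
            }
    raise KeyError(f"no context exists with the name: {name!r}")


def use_context(config: dict, name: str) -> dict:
    """Return a copy with current-context switched, like `kubectl config use-context`."""
    context_info(config, name)  # raises if the context does not exist
    updated = copy.deepcopy(config)
    updated["current-context"] = name
    return updated


# Mirrors the contexts listed by get-contexts above
MY_KUBE_CONFIG = {
    "current-context": "test-user@development",
    "contexts": [
        {"name": "aws-user@kubernetes-on-aws", "context": {"cluster": "kubernetes-on-aws", "user": "aws-user"}},
        {"name": "research", "context": {"cluster": "test-cluster-1", "user": "dev-user"}},
        {"name": "test-user@development", "context": {"cluster": "development", "user": "test-user"}},
        {"name": "test-user@production", "context": {"cluster": "production", "user": "test-user"}},
    ],
}
```

So after switching to research, every kubectl call made with this file talks to test-cluster-1 as dev-user.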

# Make my-kube-config the default kubeconfig: back up the current file, then copy the new one over it (run from /root/.kube)

# backup

$ cp config default-config

# copy

$ cp ../my-kube-config config

ㅁ Role Based Access Controls

# Which authorization modes does the kube-apiserver use?

$ k -n kube-system describe po kube-apiserver-controlplane | grep auth

--authorization-mode=Node,RBAC

--enable-bootstrap-token-auth=true

# Test what a user can do by impersonating them with --as

$ k get po --as=dev-user

# To create a Role

$ kubectl create role developer --namespace=default --verb=list,create,delete --resource=pods

or

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: default
  name: developer
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["list", "create", "delete"]

# To create a RoleBinding

$ kubectl create rolebinding dev-user-binding \
  --namespace=default \
  --role=developer \
  --user=dev-user

or

kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: dev-user-binding
  namespace: default
subjects:
- kind: User
  name: dev-user
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: developer
  apiGroup: rbac.authorization.k8s.io

# Troubleshoot a permission error

$ k -n blue get po dark-blue-app --as dev-user

Error from server (Forbidden): pods "dark-blue-app" is forbidden: User "dev-user" cannot get resource "pods" in API group "" in the namespace "blue"

# Inspect the developer role in the blue namespace

$ k -n blue get role developer -o yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: "2024-01-26T02:21:57Z"
  name: developer
  namespace: blue
  resourceVersion: "797"
  uid: adf08226-79f5-41d0-864e-095d179f8b6f
rules:
- apiGroups:
  - ""
  resourceNames:
  - blue-app
  resources:
  - pods
  verbs:
  - get
  - watch
  - create
  - delete

# Edit the role: resourceNames only lists blue-app, so add dark-blue-app

$ k -n blue edit role developer

.......
  resourceNames:
  - blue-app
  - dark-blue-app
.......

# Verify

$ k -n blue get po dark-blue-app --as dev-user

NAME READY STATUS RESTARTS AGE

dark-blue-app 1/1 Running 0 40m

# Grant more permissions: allow dev-user to create deployments in the blue namespace

$ kubectl edit role developer -n blue

......... (add the following rule)
- apiGroups:
  - apps
  resources:
  - deployments
  verbs:
  - create
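The Forbidden error above comes from resourceNames: a rule that lists names only matches requests for those objects. A simplified model of how RBAC rules are matched, for study only; it ignores subresources, non-resource URLs and aggregated roles, and the Rule class is a hypothetical stand-in, not a Kubernetes client type.

```python
from dataclasses import dataclass, field


@dataclass
class Rule:
    api_groups: list
    resources: list
    verbs: list
    resource_names: list = field(default_factory=list)


def _has(values: list, wanted: str) -> bool:
    return "*" in values or wanted in values


def allowed(rules: list, verb: str, api_group: str, resource: str, name: str = "") -> bool:
    """RBAC is purely additive: allow if any rule matches, otherwise deny."""
    for r in rules:
        if not (_has(r.api_groups, api_group) and _has(r.resources, resource) and _has(r.verbs, verb)):
            continue
        # empty resourceNames = every object; otherwise the object name must be listed
        # (requests without a name, such as create or list, never match a named rule)
        if not r.resource_names or name in r.resource_names:
            return True
    return False


# The developer role in namespace blue, before the fix
BEFORE = [Rule([""], ["pods"], ["get", "watch", "create", "delete"], ["blue-app"])]
# ...and after adding dark-blue-app plus the apps/deployments rule
AFTER = [
    Rule([""], ["pods"], ["get", "watch", "create", "delete"], ["blue-app", "dark-blue-app"]),
    Rule(["apps"], ["deployments"], ["create"]),
]
```

One consequence worth knowing: per the Kubernetes RBAC documentation, create requests cannot be restricted by resourceNames because the new object's name may not be known at authorization time, so the create verb in the named pods rule does not actually let dev-user create pods.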

ㅁ Cluster Roles

# Cluster-scoped resources (like nodes) need a ClusterRole and a ClusterRoleBinding

# clusterrole

$ kubectl create clusterrole node-role \
  --verb=get,list,watch,create,update,patch,delete \
  --resource=nodes,nodes/status

# clusterrolebinding

$ kubectl create clusterrolebinding node-role \
  --clusterrole=node-role --user=michelle

# Verify

$ kubectl auth can-i list nodes --as michelle

Warning: resource 'nodes' is not namespace scoped

yes

**Solution**

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-admin
rules:
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["get", "watch", "list", "create", "delete"]

---

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: michelle-binding
subjects:
- kind: User
  name: michelle
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: node-admin
  apiGroup: rbac.authorization.k8s.io

# Cluster-scoped resources: a storage-admin ClusterRole for persistentvolumes and storageclasses, bound to michelle

# clusterrole

$ kubectl create clusterrole storage-admin \
  --verb=get,list,watch,create,update,patch,delete \
  --resource=persistentvolumes,storageclasses

# clusterrolebinding

$ kubectl create clusterrolebinding michelle-storage-admin \
  --clusterrole=storage-admin --user=michelle

# Verify

$ kubectl auth can-i list persistentvolumes --as michelle

yes

$ kubectl auth can-i list storageclasses --as michelle

yes
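persistentvolumes lives in the core API group ("") while storageclasses lives in storage.k8s.io, so the storage-admin ClusterRole needs rules for two API groups. The sketch below builds equivalent ClusterRole and ClusterRoleBinding objects as dicts (which could be dumped to JSON for kubectl apply) to make that grouping visible. The API_GROUP table is a hand-written assumption, and the result is meant to be close to, not identical to, what kubectl create clusterrole ... --dry-run=client -o yaml prints.

```python
# Hypothetical lookup table: resource -> API group, as shown by `kubectl api-resources`
API_GROUP = {
    "nodes": "",
    "persistentvolumes": "",
    "storageclasses": "storage.k8s.io",
}


def cluster_role(name: str, resources: list, verbs: list) -> dict:
    """ClusterRole with one rule per API group (a rule's apiGroups applies to all its resources)."""
    rules = {}
    for res in resources:
        group = API_GROUP[res]
        rules.setdefault(group, {"apiGroups": [group], "resources": [], "verbs": list(verbs)})
        rules[group]["resources"].append(res)
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRole",
        "metadata": {"name": name},  # cluster-scoped: no namespace
        "rules": list(rules.values()),
    }


def cluster_role_binding(name: str, role: str, user: str) -> dict:
    """ClusterRoleBinding granting `role` to a single user across the whole cluster."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": name},
        "subjects": [{"kind": "User", "name": user, "apiGroup": "rbac.authorization.k8s.io"}],
        "roleRef": {"kind": "ClusterRole", "name": role, "apiGroup": "rbac.authorization.k8s.io"},
    }
```

Keeping one rule per API group avoids granting combinations that do not exist, such as storageclasses in the core group.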

ㅁ Service Accounts

# Troubleshooting: the dashboard app fails with this error

pods is forbidden: User "system:serviceaccount:default:default" cannot list resource "pods" in API group "" in the namespace "default"

# Which ServiceAccount does the pod use?

$ k get po web-dashboard-97c9c59f6-89gxc -o yaml | grep service

- mountPath: /var/run/secrets/kubernetes.io/serviceaccount

serviceAccount: default <========

serviceAccountName: default

- serviceAccountToken:

# Where are the pod's ServiceAccount credentials mounted?

$ k describe pod web-dashboard-97c9c59f6-89gxc

...............

Containers:

web-dashboard:

Container ID: containerd://eff5ff637a2eb57c14a65aa67af51cc7e43116beaea88b0c39f498d015aa6fc4

Image: gcr.io/kodekloud/customimage/my-kubernetes-dashboard

Image ID: gcr.io/kodekloud/customimage/my-kubernetes-dashboard@sha256:7d70abe342b13ff1c4242dc83271ad73e4eedb04e2be0dd30ae7ac8852193069

Port: 8080/TCP

Host Port: 0/TCP

State: Running

Started: Fri, 26 Jan 2024 06:07:34 +0000

Ready: True

Restart Count: 0

Environment:

PYTHONUNBUFFERED: 1

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-5q7zt (ro)

...............

# Create the ServiceAccount

$ kubectl create serviceaccount dashboard-sa

$ cat dashboard-sa-role-binding.yaml

kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: read-pods
  namespace: default
subjects:
- kind: ServiceAccount
  name: dashboard-sa # Name is case sensitive
  namespace: default
roleRef:
  kind: Role # this must be Role or ClusterRole
  name: pod-reader # this must match the name of the Role or ClusterRole you wish to bind to
  apiGroup: rbac.authorization.k8s.io

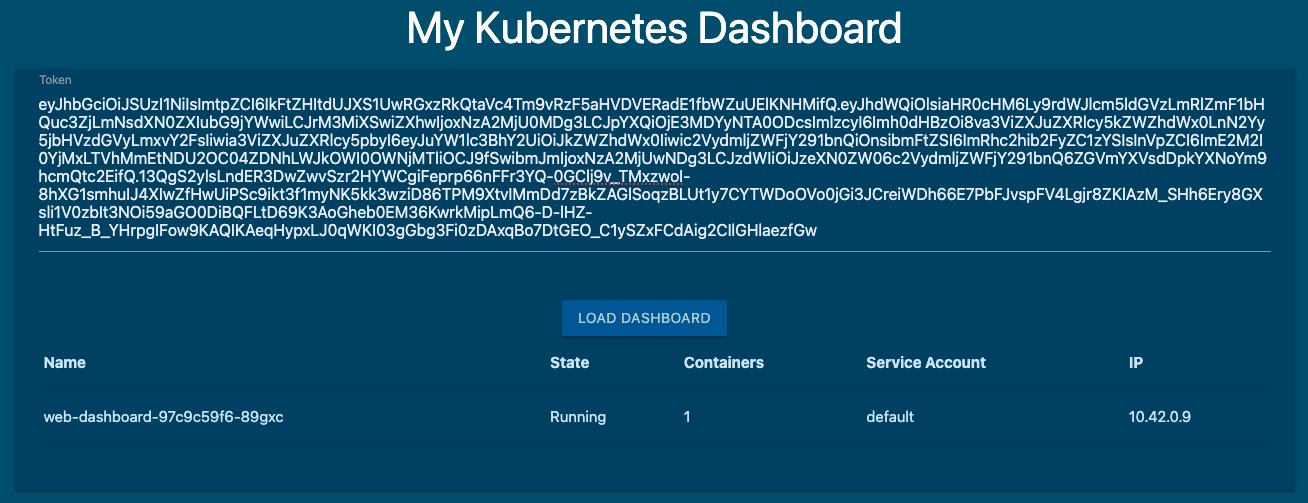

To load the dashboard, create an authentication token for the newly created dashboard-sa ServiceAccount with kubectl create token dashboard-sa, copy the token, paste it into the token field of the dashboard UI, and click the Load Dashboard button.

$ kubectl create token dashboard-sa

eyJhbGciOiJSUzI1NiIsImtpZCI6IkFtZHItdUJXS1UwRGxzRkQtaVc4Tm9vRzF5aHVDVERadE1fbWZuUElKNHMifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiLCJrM3MiXSwiZXhwIjoxNzA2MjU0MDg3LCJpYXQiOjE3MDYyNTA0ODcsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJkZWZhdWx0Iiwic2VydmljZWFjY291bnQiOnsibmFtZSI6ImRhc2hib2FyZC1zYSIsInVpZCI6ImE2M2I0YjMxLTVhMmEtNDU2OC04ZDNhLWJkOWI0OWNjMTliOCJ9fSwibmJmIjoxNzA2MjUwNDg3LCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpkYXNoYm9hcmQtc2EifQ.13QgS2ylsLndER3DwZwvSzr2HYWCgiFeprp66nFFr3YQ-0GClj9v_TMxzwoI-8hXG1smhuIJ4XIwZfHwUiPSc9ikt3f1myNK5kk3wziD86TPM9XtvIMmDd7zBkZAGlSoqzBLUt1y7CYTWDoOVo0jGi3JCreiWDh66E7PbFJvspFV4Lgjr8ZKlAzM_SHh6Ery8GXsli1V0zbIt3NOi59aGO0DiBQFLtD69K3AoGheb0EM36KwrkMipLmQ6-D-lHZ-HtFuz_B_YHrpgIFow9KAQIKAeqHypxLJ0qWKI03gGbg3Fi0zDAxqBo7DtGEO_C1ySZxFCdAig2CIlGHlaezfGw
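A ServiceAccount token is a JWT, so its claims can be inspected locally to confirm which identity the dashboard will act as. A minimal sketch; it only decodes the payload and does not verify the signature, and fake_token is a hypothetical helper for testing, not something kubectl produces.

```python
import base64
import json


def _b64url_decode(segment: str) -> bytes:
    # JWTs use base64url without padding; add it back before decoding
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def jwt_claims(token: str) -> dict:
    """Decode a JWT payload WITHOUT verifying the signature (inspection only)."""
    return json.loads(_b64url_decode(token.split(".")[1]))


def sa_username(namespace: str, name: str) -> str:
    """Username the API server uses for a ServiceAccount, as seen in RBAC errors."""
    return f"system:serviceaccount:{namespace}:{name}"


def fake_token(claims: dict) -> str:
    """Hypothetical test helper: an UNSIGNED token carrying the given claims."""
    def enc(obj: dict) -> str:
        return base64.urlsafe_b64encode(json.dumps(obj).encode()).rstrip(b"=").decode()
    return f'{enc({"alg": "none"})}.{enc(claims)}.'
```

Run on the token printed above, jwt_claims should report sub as system:serviceaccount:default:dashboard-sa (the same naming scheme as the default:default user in the earlier Forbidden error) and an exp 3600 seconds after iat, i.e. a one-hour token.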

# Set the ServiceAccount on the web-dashboard deployment

# Option 1: edit the deployment and add serviceAccountName to the pod template spec

spec:
  serviceAccountName: dashboard-sa <====
  containers:
  - image: gcr.io/kodekloud/customimage/my-kubernetes-dashboard
    imagePullPolicy: Always
    name: web-dashboard

# Option 2: kubectl set serviceaccount (https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands#-em-serviceaccount-em--1)

$ kubectl set serviceaccount deployment web-dashboard dashboard-sa

ㅁ Image Security

# Switch the deployment to the image in the private registry

$ k edit deployments.apps web

..............................
spec:
  containers:
  - image: myprivateregistry.com:5000/nginx:alpine <====
    imagePullPolicy: IfNotPresent
    name: nginx
..............................

# Create the registry credentials secret

$ kubectl create secret docker-registry private-reg-cred \
  --docker-server=myprivateregistry.com:5000 \
  --docker-username=dock_user \
  --docker-password=dock_password \
  --docker-email=dock_user@myprivateregistry.com
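kubectl create secret docker-registry stores these credentials as a Secret of type kubernetes.io/dockerconfigjson, whose .dockerconfigjson key holds a Docker config.json with an auths map. A rough sketch of that payload, to show what the kubelet ends up reading; the layout follows the Docker config format, but the exact output of kubectl (key order, extra fields) may differ.

```python
import base64
import json


def dockerconfigjson(server: str, username: str, password: str, email: str = "") -> str:
    """Build a Docker config.json with one registry entry under "auths"."""
    # "auth" is base64("username:password"), as in ~/.docker/config.json
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    entry = {"username": username, "password": password, "auth": auth}
    if email:
        entry["email"] = email
    return json.dumps({"auths": {server: entry}})


def secret_manifest(name: str, config_json: str) -> dict:
    """Secret of type kubernetes.io/dockerconfigjson wrapping the config above."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "kubernetes.io/dockerconfigjson",
        # values under .data are base64-encoded
        "data": {".dockerconfigjson": base64.b64encode(config_json.encode()).decode()},
    }
```

This also shows why the secret is only "encoded", not encrypted: anyone who can read it can recover dock_user:dock_password with two base64 decodes.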

# Reference the secret with imagePullSecrets in the pod spec (imagePullSecrets sits next to containers, not inside the container list)

...........
spec:
  containers:
  - image: myprivateregistry.com:5000/nginx:alpine
    imagePullPolicy: IfNotPresent
    name: nginx
  imagePullSecrets:
  - name: private-reg-cred
...............

ㅁ Security Contexts

# Set the user ID that processes in the pod run as

$ k run ubuntu-sleeper --image ubuntu -o yaml --dry-run=client --command -- sleep "4800" > pod2.yaml

# Then add securityContext.runAsUser to pod2.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: ubuntu-sleeper
  namespace: default
spec:
  securityContext:
    runAsUser: 1010
  containers:
  - command:
    - sleep
    - "4800"
    image: ubuntu
    name: ubuntu-sleeper

# If securityContext is set at both the pod and the container level, the container-level setting overrides the pod-level one.

apiVersion: v1
kind: Pod
metadata:
  name: multi-pod
spec:
  securityContext:
    runAsUser: 1001
  containers:
  - image: ubuntu
    name: web
    command: ["sleep", "5000"]
    securityContext:
      runAsUser: 1002
  - image: ubuntu
    name: sidecar
    command: ["sleep", "5000"]

# Exec into the pod and check which user the processes run as

$ k exec -it multi-pod -- bash

Defaulted container "web" out of: web, sidecar

I have no name!@multi-pod:/$ ps -ef

UID PID PPID C STIME TTY TIME CMD

1002 1 0 0 08:22 ? 00:00:00 sleep 5000

1002 59 0 0 08:22 pts/0 00:00:00 bash

1002 66 59 0 08:22 pts/0 00:00:00 ps -ef

I have no name!@multi-pod:/$
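The ps output matches the precedence rule: web runs as 1002 (its own setting), while the sidecar, which sets nothing, inherits the pod-level 1001. The sketch below encodes that rule for runAsUser only, using the multi-pod spec as a Python dict; fields such as capabilities exist only at the container level and are not modelled.

```python
from typing import Optional


def effective_run_as_user(pod_spec: dict) -> dict:
    """Map container name -> effective runAsUser; a container-level value beats the pod-level one."""
    pod_uid: Optional[int] = (pod_spec.get("securityContext") or {}).get("runAsUser")
    result = {}
    for c in pod_spec.get("containers", []):
        own = (c.get("securityContext") or {}).get("runAsUser")
        # None means neither level sets it, so the image's default user applies
        result[c["name"]] = own if own is not None else pod_uid
    return result


# spec section of multi-pod above
MULTI_POD_SPEC = {
    "securityContext": {"runAsUser": 1001},
    "containers": [
        {"name": "web", "image": "ubuntu", "securityContext": {"runAsUser": 1002}},
        {"name": "sidecar", "image": "ubuntu"},
    ],
}
```

The "I have no name!" prompt is expected: UID 1002 has no entry in the ubuntu image's /etc/passwd.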

ㅇ securityContext : capabilities

# Sample from the Kubernetes docs

-----------------

apiVersion: v1
kind: Pod
metadata:
  name: security-context-demo-4
spec:
  containers:
  - name: sec-ctx-4
    image: gcr.io/google-samples/node-hello:1.0
    securityContext:
      capabilities:
        add: ["NET_ADMIN", "SYS_TIME"]

-----------------

$ cat pod3.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: ubuntu-sleeper
  name: ubuntu-sleeper
spec:
  containers:
  - command:
    - sleep
    - "4800"
    image: ubuntu
    name: ubuntu-sleeper
    securityContext:
      capabilities:
        add: ["SYS_TIME"]
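To confirm that SYS_TIME (or NET_ADMIN) was actually granted, one option is to read the CapEff bitmask from /proc/1/status inside the container, for example with kubectl exec ubuntu-sleeper -- grep CapEff /proc/1/status, and decode it. A sketch of the decoding; the table is partial (bit numbers from linux/capability.h) and unknown bits are reported by number. This is most useful for a root process like this one, since a non-root process typically ends up with an empty effective set even when capabilities are added.

```python
# Partial map of Linux capability bit numbers (see <linux/capability.h>)
CAP_BITS = {
    0: "CHOWN", 1: "DAC_OVERRIDE", 3: "FOWNER", 4: "FSETID", 5: "KILL",
    6: "SETGID", 7: "SETUID", 8: "SETPCAP", 10: "NET_BIND_SERVICE",
    12: "NET_ADMIN", 13: "NET_RAW", 18: "SYS_CHROOT", 21: "SYS_ADMIN",
    25: "SYS_TIME", 27: "MKNOD", 29: "AUDIT_WRITE", 31: "SETFCAP",
}


def decode_caps(hex_mask: str) -> list:
    """Turn a CapEff/CapPrm hex mask into capability names, lowest bit first."""
    mask = int(hex_mask, 16)
    names = []
    bit = 0
    while mask >> bit:
        if (mask >> bit) & 1:
            names.append(CAP_BITS.get(bit, f"bit {bit}"))
        bit += 1
    return names


def caps_from_status(status_text: str, field: str = "CapEff") -> list:
    """Pick one Cap* line out of /proc/<pid>/status text and decode it."""
    for line in status_text.splitlines():
        key, _, value = line.partition(":")
        if key == field:
            return decode_caps(value.strip())
    raise ValueError(f"{field} not found")
```

A mask with only NET_ADMIN (bit 12) and SYS_TIME (bit 25) set is 0x2001000; in a real container these bits appear alongside the runtime's default capabilities.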

ㅁ Network Policies

# Which pods does the network policy apply to? (the pods matched by spec.podSelector)

$ k get networkpolicies.networking.k8s.io payroll-policy -o yaml

....................
  podSelector:
    matchLabels:
      name: payroll
....................

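# Create a network policy that allows traffic from the internal application only to the payroll service and the DB service.

# Use the spec below. You may allow ingress traffic to the pod so that the rules can be tested from the UI.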

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: internal-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      name: internal
  policyTypes:
  - Egress
  - Ingress
  ingress:
  - {}
  egress:
  - to:
    - podSelector:
        matchLabels:
          name: mysql
    ports:
    - protocol: TCP
      port: 3306
  - to:
    - podSelector:
        matchLabels:
          name: payroll
    ports:
    - protocol: TCP
      port: 8080
  - ports:
    - port: 53
      protocol: UDP
    - port: 53
      protocol: TCP

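Note: egress to port 53 over both TCP and UDP is also allowed so that the internal pod can still resolve names through the cluster DNS. The last egress rule has no "to" field, so it applies to any destination on port 53.

The kube-dns service is exposed on port 53: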

$ kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 60m
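Egress rules are ORed, and within one rule the "to" and "ports" conditions must both hold; a rule without "to" (the DNS rule) matches every destination. A simplified evaluator for internal-policy, assuming a single policy with an explicit policyTypes, same-namespace podSelector peers and numeric ports. Real policies can also use namespaceSelector, ipBlock and named ports, and several policies selecting the same pod are combined, so this is a study aid, not how a CNI plugin enforces policy.

```python
def selector_matches(match_labels: dict, labels: dict) -> bool:
    """matchLabels: every key/value must be present (an empty selector matches every pod)."""
    return all(labels.get(k) == v for k, v in match_labels.items())


def _peer_ok(rule: dict, labels: dict) -> bool:
    peers = rule.get("to")
    if not peers:
        return True  # no "to": any destination
    # only podSelector peers are modelled; namespaceSelector/ipBlock peers never match here
    return any(
        "podSelector" in p and selector_matches(p["podSelector"].get("matchLabels", {}), labels)
        for p in peers
    )


def _port_ok(rule: dict, port: int, protocol: str) -> bool:
    ports = rule.get("ports")
    if not ports:
        return True  # no "ports": every port
    return any(p.get("port") == port and p.get("protocol", "TCP") == protocol for p in ports)


def egress_allowed(spec: dict, src_labels: dict, dst_labels: dict, port: int, protocol: str = "TCP") -> bool:
    # a pod not selected by the policy is not restricted by it
    if not selector_matches(spec["podSelector"].get("matchLabels", {}), src_labels):
        return True
    # policyTypes is assumed to be set explicitly, as it is in internal-policy
    if "Egress" not in spec.get("policyTypes", []):
        return True
    return any(_peer_ok(r, dst_labels) and _port_ok(r, port, protocol) for r in spec.get("egress", []))


# spec of internal-policy above
INTERNAL_POLICY = {
    "podSelector": {"matchLabels": {"name": "internal"}},
    "policyTypes": ["Egress", "Ingress"],
    "ingress": [{}],
    "egress": [
        {"to": [{"podSelector": {"matchLabels": {"name": "mysql"}}}], "ports": [{"protocol": "TCP", "port": 3306}]},
        {"to": [{"podSelector": {"matchLabels": {"name": "payroll"}}}], "ports": [{"protocol": "TCP", "port": 8080}]},
        {"ports": [{"port": 53, "protocol": "UDP"}, {"port": 53, "protocol": "TCP"}]},
    ],
}
```

For example, internal to mysql on 3306/TCP is allowed, internal to mysql on 8080 is not, and DNS lookups on 53/UDP work regardless of the destination pod's labels.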
