
피터의 개발이야기 (Peter's Dev Story)

[Elasticsearch] Installing EFK (minikube), Part 1

ㅁ Related posts

ㅇ [Elasticsearch] What is EFK (Elasticsearch, Fluentd, Kibana)?

ㅇ [Elasticsearch] Installing EFK (minikube), Part 1

ㅇ [Elasticsearch] Installing EFK (minikube), Part 2

ㅁ Overview

ㅇ The previous post, [Elasticsearch] What is EFK (Elasticsearch, Fluentd, Kibana)?, covered what EFK is.

ㅇ For hands-on practice, this two-part series walks through installing EFK on minikube.

ㅇ The related source is here.

ㅁ Starting minikube

$ minikube start --cpus 4 --memory 8192
😄 minikube v1.25.2 on Darwin 11.2
🆕 Kubernetes 1.23.3 is now available. If you would like to upgrade, specify: --kubernetes-version=v1.23.3
✨ Using the hyperkit driver based on existing profile
❗ You cannot change the memory size for an existing minikube cluster. Please first delete the cluster.
❗ You cannot change the CPUs for an existing minikube cluster. Please first delete the cluster.
👍 Starting control plane node minikube in cluster minikube
🔄 Restarting existing hyperkit VM for "minikube" ...
🎉 minikube 1.26.1 is available! Download it: https://github.com/kubernetes/minikube/releases/tag/v1.26.1
💡 To disable this notice, run: 'minikube config set WantUpdateNotification false'
🐳 Preparing Kubernetes v1.23.1 on Docker 20.10.12 ...
▪ kubelet.housekeeping-interval=5m
🔎 Verifying Kubernetes components...
▪ Using image kubernetesui/metrics-scraper:v1.0.7
▪ Using image kubernetesui/dashboard:v2.3.1
▪ Using image gcr.io/k8s-minikube/storage-provisioner:v5
🌟 Enabled addons: storage-provisioner, default-storageclass, dashboard
🏄 Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

$ kubectl get node
NAME       STATUS   ROLES                  AGE   VERSION
minikube   Ready    control-plane,master   40d   v1.23.1

ㅇ minikube was started with --cpus 4 --memory 8192 (4 CPUs, 8 GB of memory). Note that the output above warns that CPU and memory cannot be changed for an existing cluster, so these values only take effect if the cluster is deleted and recreated first.

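ㅁ Deploying the Flask-hello app

run.py

from flask import Flask
from flask import request, jsonify

app = Flask(__name__)


@app.route("/")
def hello_world():
    # Return a minimal HTML greeting for the root path.
    return "<p>Hello, World!</p>"


if __name__ == '__main__':
    # Listen on all interfaces so the container port (8080) is reachable.
    app.run(host="0.0.0.0", port=8080)

ㅇ The sample app used for testing was built in this post and is already published on Docker Hub.

ㅇ It is a simple Flask app that returns Hello, World!.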

flaskApp.yaml

apiVersion: v1
kind: Service
metadata:
  name: flask-hello
  namespace: hello
spec:
  selector:
    app: flask-hello
  ports:
    - name: http
      protocol: TCP
      port: 8090
      targetPort: 8080
  type: LoadBalancer
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: flask-hello
  namespace: hello
spec:
  selector:
    matchLabels:
      app: flask-hello
  replicas: 2
  template:
    metadata:
      labels:
        app: flask-hello
    spec:
      containers:
        - name: flask-hello
          image: ilovefran/flask-hello
          ports:
            - containerPort: 8080

ㅇ This is the yaml used to deploy the Flask app.

ㅇ A Deployment with replicas set to 2, so that two containers are started.

ㅇ A Service of type LoadBalancer for external access.

ㅇ The namespace is hello.
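The relationship between the Service and the Deployment described above can be sketched in a few lines of Python. This is only an illustration: the dictionaries are hand-copied from flaskApp.yaml so that no YAML parser is needed, and the helper names `selector_matches` and `undeclared_target_ports` are hypothetical, not part of any Kubernetes tooling.

```python
# Plain dicts mirroring the relevant fields of flaskApp.yaml
# (hand-copied here so the sketch needs no YAML parser).
service = {
    "selector": {"app": "flask-hello"},
    "ports": [{"name": "http", "port": 8090, "targetPort": 8080}],
}
deployment = {
    "template_labels": {"app": "flask-hello"},
    "container_ports": [8080],
}


def selector_matches(selector, labels):
    """A Service selects a pod when every selector key/value is in the pod labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def undeclared_target_ports(svc, dep):
    """Return Service targetPorts that no containerPort entry declares.

    containerPort is primarily informational in Kubernetes, so an entry
    here is a hint to double-check the manifest, not proof of a failure.
    """
    return [
        port["targetPort"]
        for port in svc["ports"]
        if port["targetPort"] not in dep["container_ports"]
    ]


print(selector_matches(service["selector"], deployment["template_labels"]))
print(undeclared_target_ports(service, deployment))
```

For this manifest the selector matches the pod template labels, and the Service's targetPort 8080 is the port the Flask container listens on, while 8090 is the port the Service itself exposes.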

ㅁ Creating the hello namespace

# create the namespace

$ kubectl create namespace hello

namespace/hello created

# list the namespaces

$ kubectl get namespaces

NAME STATUS AGE

default Active 41d

hello Active 9s

kube-node-lease Active 41d

kube-public Active 41d

kube-system Active 41d

kubernetes-dashboard Active 41d

ㅇ Create the 'hello' namespace.

ㅇ Deleting this namespace later cleans up everything created for the test in one step.

ㅁ kubectl apply and verifying the deployment

# deploy the app with kubectl

$ kubectl apply -f flaskApp.yaml

service/flask-hello created

deployment.apps/flask-hello created

# list the services

$ minikube service list

|----------------------|----------------------------------------------------|--------------|---------------------------|

| NAMESPACE | NAME | TARGET PORT | URL |

|----------------------|----------------------------------------------------|--------------|---------------------------|

| default | alertmanager-operated | No node port |

| default | kubernetes | No node port |

| default | prometheus-grafana | http-web/80 | http://192.168.64.2:30700 |

| default | prometheus-kube-prometheus-alertmanager | No node port |

| default | prometheus-kube-prometheus-operator | No node port |

| default | prometheus-kube-prometheus-prometheus | No node port |

| default | prometheus-kube-state-metrics | No node port |

| default | prometheus-operated | No node port |

| default | prometheus-prometheus-node-exporter | No node port |

| hello | flask-hello | http/8090 | http://192.168.64.2:31600 |

| kube-system | kube-dns | No node port |

| kube-system | prometheus-kube-prometheus-coredns | No node port |

| kube-system | prometheus-kube-prometheus-kube-controller-manager | No node port |

| kube-system | prometheus-kube-prometheus-kube-etcd | No node port |

| kube-system | prometheus-kube-prometheus-kube-proxy | No node port |

| kube-system | prometheus-kube-prometheus-kube-scheduler | No node port |

| kube-system | prometheus-kube-prometheus-kubelet | No node port |

| kubernetes-dashboard | dashboard-metrics-scraper | No node port |

| kubernetes-dashboard | kubernetes-dashboard | No node port |

|----------------------|----------------------------------------------------|--------------|---------------------------|

ㅇ The service and deployment were created from flaskApp.yaml.

ㅇ For a LoadBalancer Service, the access URL can be found in the output of minikube service list.

ㅇ I confirmed that the Hello, World! message is returned.

ㅇ kubectl get all -n hello shows the two pods and the service.

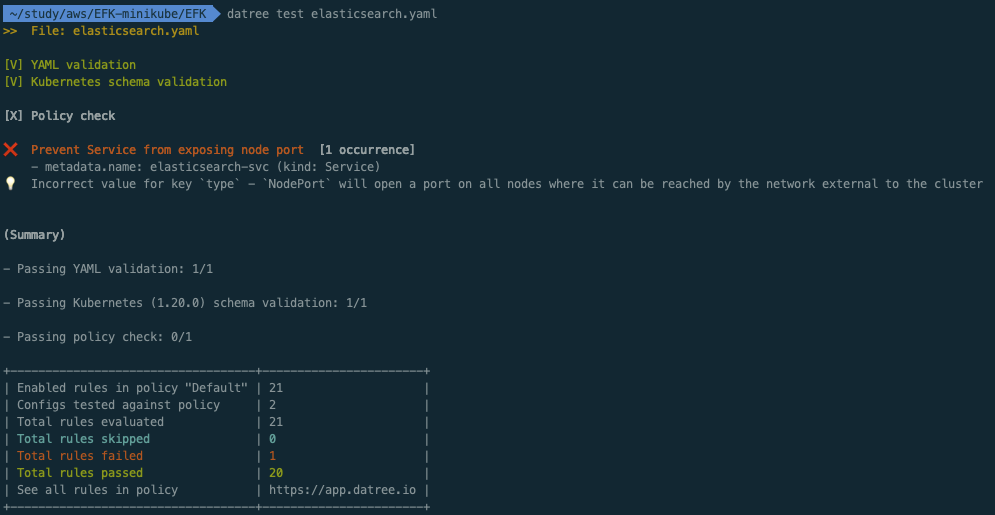
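The manual check of the Hello, World! response could also be scripted. The sketch below uses only the Python standard library; `fetch_body` and `demo` are hypothetical helper names, and the small stub server merely stands in for the flask-hello Service so that the example runs without a cluster.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def fetch_body(url, timeout=5.0):
    """GET the URL directly (ignoring proxy settings) and return (status, body)."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url, timeout=timeout) as resp:
        return resp.status, resp.read().decode("utf-8")


class _StubHello(BaseHTTPRequestHandler):
    """Stand-in for the flask-hello app; returns the same HTML body."""

    def do_GET(self):
        body = b"<p>Hello, World!</p>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet


def demo():
    """Start the stub on a free local port, fetch it once, then shut it down."""
    server = HTTPServer(("127.0.0.1", 0), _StubHello)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        return fetch_body(f"http://127.0.0.1:{server.server_port}/")
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    status, body = demo()
    print(status, body)
```

Against the real cluster, `fetch_body` would be called with the URL that minikube service list reports for flask-hello (http://192.168.64.2:31600 in the output above).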

ㅁ Validating elasticsearch.yaml with Datree

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elasticsearch
  namespace: elastic
  labels:
    app: elasticsearch
spec:
  serviceName: elasticsearch
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
        - name: elasticsearch
          image: elastic/elasticsearch:7.2.0
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          env:
            - name: discovery.type
              value: single-node
          ports:
            - containerPort: 9200
              protocol: TCP
            - containerPort: 9300
              protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch-svc
  namespace: elastic
spec:
  ports:
    - name: elasticsearch-rest
      nodePort: 30920
      port: 9200
      protocol: TCP
      targetPort: 9200
    - name: elasticsearch-nodecom
      nodePort: 30930
      port: 9300
      protocol: TCP
      targetPort: 9300
  selector:
    app: elasticsearch
  type: NodePort

ㅇ Let's use Datree to check the yaml before applying it.

ㅇ A post about Datree can be found here.

ㅇ I had previously used it on AWS, so this time I installed it on the Mac as well.

ㅇ YAML validation passed.

ㅇ Kubernetes schema validation passed as well.

ㅇ Datree warned about limits.memory and requests.memory, so I set both to 200Mi and added a livenessProbe and a readinessProbe.

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elasticsearch
  namespace: elastic
  labels:
    app: elasticsearch
spec:
  serviceName: elasticsearch
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
        - name: elasticsearch
          image: elastic/elasticsearch:7.2.0
          resources:
            limits:
              cpu: 1000m
              memory: 200Mi
            requests:
              cpu: 100m
              memory: 200Mi
          env:
            - name: discovery.type
              value: single-node
          ports:
            - containerPort: 9200
              protocol: TCP
            - containerPort: 9300
              protocol: TCP
          livenessProbe:
            tcpSocket:
              port: transport
            initialDelaySeconds: 20
            periodSeconds: 10
          readinessProbe:
            httpGet:
              path: /_cluster/health
              port: http
            initialDelaySeconds: 20
            timeoutSeconds: 5
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch-svc
  namespace: elastic
spec:
  ports:
    - name: elasticsearch-rest
      nodePort: 30920
      port: 9200
      protocol: TCP
      targetPort: 9200
    - name: elasticsearch-nodecom
      nodePort: 30930
      port: 9300
      protocol: TCP
      targetPort: 9300
  selector:
    app: elasticsearch
  type: NodePort

ㅇ The earlier warnings are gone. The remaining NodePort finding is ignored, because this Service is mostly used internally.
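To make the kind of check involved more concrete, here is a minimal sketch of a rule that flags a container spec lacking memory requests/limits or probes. It is written in the spirit of the findings above and is not Datree's implementation; `missing_settings` and the two sample dictionaries (hand-copied from the manifests before and after the change) are assumptions made for illustration.

```python
def missing_settings(container):
    """Return the recommended settings that a container spec does not define."""
    missing = []
    resources = container.get("resources", {})
    for section in ("requests", "limits"):
        if "memory" not in resources.get(section, {}):
            missing.append(f"resources.{section}.memory")
    for probe in ("livenessProbe", "readinessProbe"):
        if probe not in container:
            missing.append(probe)
    return missing


# Container spec before the change: CPU only, no probes.
before = {
    "name": "elasticsearch",
    "resources": {"limits": {"cpu": "1000m"}, "requests": {"cpu": "100m"}},
}

# Container spec after the change: memory set to 200Mi, probes added.
after = {
    "name": "elasticsearch",
    "resources": {
        "limits": {"cpu": "1000m", "memory": "200Mi"},
        "requests": {"cpu": "100m", "memory": "200Mi"},
    },
    "livenessProbe": {"tcpSocket": {"port": "transport"}},
    "readinessProbe": {"httpGet": {"path": "/_cluster/health", "port": "http"}},
}

print(missing_settings(before))
print(missing_settings(after))
```

A check like this only confirms that the fields exist. It says nothing about whether 200Mi is enough memory or whether the probes can succeed.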

ㅁ Deploying Elasticsearch

# create the namespace

$ kubectl create namespace elastic

namespace/elastic created

# deploy

$ kubectl apply -f elasticsearch.yaml

statefulset.apps/elasticsearch created

service/elasticsearch-svc created

ㅇ Create the elastic namespace so that the test can be cleaned up easily afterwards.

ㅇ The statefulset and the service were created.

ㅁ Verifying the Elasticsearch deployment

$ kubectl get all -n elastic

NAME READY STATUS RESTARTS AGE

pod/elasticsearch-0 0/1 CrashLoopBackOff 5 (71s ago) 5m7s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/elasticsearch-svc NodePort 10.103.53.159 <none> 9200:30920/TCP,9300:30930/TCP 5m7s

NAME READY AGE

statefulset.apps/elasticsearch 0/1 5m7s

ㅇ The pod is in CrashLoopBackOff.

ㅇ A closer look is needed.

$ kubectl describe pod -n elastic elasticsearch-0

Name: elasticsearch-0

Namespace: elastic

Priority: 0

Node: minikube/192.168.64.2

Start Time: Mon, 08 Aug 2022 00:38:19 +0900

Labels: app=elasticsearch

controller-revision-hash=elasticsearch-86b8c6bcd6

statefulset.kubernetes.io/pod-name=elasticsearch-0

Annotations: <none>

Status: Running

IP: 172.17.0.10

IPs:

IP: 172.17.0.10

Controlled By: StatefulSet/elasticsearch

Containers:

elasticsearch:

Container ID: docker://6c313541cb0ec499598b9fdf8d7a064672319e92536b9f7523c26e0041b8206a

Image: elastic/elasticsearch:7.2.0

Image ID: docker-pullable://elastic/elasticsearch@sha256:25859ce3c9a4ac9b669411d2aa09d436ba34d39c23f0e3b6b058d8a7a0a8d36a

Ports: 9200/TCP, 9300/TCP

Host Ports: 0/TCP, 0/TCP

State: Waiting

Reason: CrashLoopBackOff

Last State: Terminated

Reason: OOMKilled

Exit Code: 1

Started: Mon, 08 Aug 2022 00:42:14 +0900

Finished: Mon, 08 Aug 2022 00:42:15 +0900

Ready: False

Restart Count: 5

Limits:

cpu: 1

memory: 200Mi

Requests:

cpu: 100m

memory: 200Mi

Liveness: tcp-socket :transport delay=20s timeout=1s period=10s #success=1 #failure=3

Readiness: http-get http://:http/_cluster/health delay=20s timeout=5s period=10s #success=1 #failure=3

Environment:

discovery.type: single-node

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-qnc9v (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-qnc9v:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 5m12s default-scheduler Successfully assigned elastic/elasticsearch-0 to minikube

Normal Pulling 5m12s kubelet Pulling image "elastic/elasticsearch:7.2.0"

Normal Pulled 4m13s kubelet Successfully pulled image "elastic/elasticsearch:7.2.0" in 58.461465888s

Normal Created 3m27s (x4 over 4m13s) kubelet Created container elasticsearch

Normal Started 3m27s (x4 over 4m13s) kubelet Started container elasticsearch

Normal Pulled 3m27s (x3 over 4m12s) kubelet Container image "elastic/elasticsearch:7.2.0" already present on machine

Warning BackOff 7s (x28 over 4m10s) kubelet Back-off restarting failed container

ㅇ The Docker image was pulled successfully, and the container was created and started.

ㅇ A Back-off event shows the container being restarted repeatedly, so let's check the logs.

$ kubectl logs -n elastic pods/elasticsearch-0

Exception in thread "main" java.lang.RuntimeException: starting java failed with [137]

output:

error:

OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.

at org.elasticsearch.tools.launchers.JvmErgonomics.flagsFinal(JvmErgonomics.java:111)

at org.elasticsearch.tools.launchers.JvmErgonomics.finalJvmOptions(JvmErgonomics.java:79)

at org.elasticsearch.tools.launchers.JvmErgonomics.choose(JvmErgonomics.java:57)

at org.elasticsearch.tools.launchers.JvmOptionsParser.main(JvmOptionsParser.java:89)
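The number in "starting java failed with [137]" can be read with the common shell convention that an exit status above 128 means "terminated by signal (status - 128)". That reading is an interpretation, not something the log states, but it is consistent with the OOMKilled reason in the describe output. A minimal sketch, with `describe_exit_status` as a hypothetical helper name:

```python
import signal


def describe_exit_status(code):
    """Interpret an exit status using the 128 + signal-number convention."""
    if code > 128:
        signum = code - 128
        try:
            name = signal.Signals(signum).name
        except ValueError:
            # This platform has no name for that signal number.
            name = f"signal {signum}"
        return f"killed by {name}"
    return f"exited with status {code}"


print(describe_exit_status(137))
```

On Linux or macOS this reports SIGKILL (signal 9), which is the signal sent to a process that exceeds its container memory limit.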

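ㅁ Troubleshooting

ㅇ I am not sure whether this problem is specific to the minikube environment.

ㅇ Following Elasticsearch issue #45170, I added the following environment variable:

- name: ES_JAVA_OPTS
  value: "-Xms512m -Xmx512m"

ㅇ With the faulty readinessProbe, the container logs looked healthy and the pod status was Running, but the pod never became Ready.

ㅇ Because the readinessProbe was misconfigured, I commented it out for now, and Elasticsearch is running normally.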
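The post does not say what exactly was wrong with the readinessProbe. One thing worth checking, offered here only as a possibility, is that the probes refer to ports by the names http and transport, while the containerPort entries in the manifest define no names. Kubernetes resolves a named probe port against the named ports of the same container. The sketch below looks for such unresolved names; `unresolved_probe_ports` is a hypothetical helper, and the dictionaries are hand-copied from the manifest.

```python
def unresolved_probe_ports(container):
    """Return (probe, port_name) pairs whose named port is not declared."""
    named = {p["name"] for p in container.get("ports", []) if "name" in p}
    problems = []
    for probe_name in ("livenessProbe", "readinessProbe"):
        probe = container.get(probe_name, {})
        for handler in ("tcpSocket", "httpGet"):
            port = probe.get(handler, {}).get("port")
            # Numeric ports need no lookup; only names must resolve.
            if isinstance(port, str) and port not in named:
                problems.append((probe_name, port))
    return problems


# Container spec as written in the manifest: ports without names.
as_written = {
    "ports": [{"containerPort": 9200}, {"containerPort": 9300}],
    "livenessProbe": {"tcpSocket": {"port": "transport"}},
    "readinessProbe": {"httpGet": {"path": "/_cluster/health", "port": "http"}},
}

# Hypothetical variant that names the ports the probes refer to.
with_names = dict(
    as_written,
    ports=[
        {"name": "http", "containerPort": 9200},
        {"name": "transport", "containerPort": 9300},
    ],
)

print(unresolved_probe_ports(as_written))
print(unresolved_probe_ports(with_names))
```

If this were the cause, naming the ports or using the numeric ports 9200 and 9300 in the probes would be an alternative to commenting the readinessProbe out.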

$ kubectl logs -n elastic statefulsets/elasticsearch

OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.

{"type": "server", "timestamp": "2022-08-07T16:36:51,453+0000", "level": "INFO", "component": "o.e.e.NodeEnvironment", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "using [1] data paths, mounts [[/ (overlay)]], net usable_space [9gb], net total_space [16.9gb], types [overlay]" }

{"type": "server", "timestamp": "2022-08-07T16:36:51,456+0000", "level": "INFO", "component": "o.e.e.NodeEnvironment", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "heap size [503.6mb], compressed ordinary object pointers [true]" }

{"type": "server", "timestamp": "2022-08-07T16:36:51,461+0000", "level": "INFO", "component": "o.e.n.Node", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "node name [elasticsearch-0], node ID [v1QsbFFSQ-mUflFRqF6yow], cluster name [docker-cluster]" }

{"type": "server", "timestamp": "2022-08-07T16:36:51,508+0000", "level": "INFO", "component": "o.e.n.Node", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "version[7.2.0], pid[1], build[default/docker/508c38a/2019-06-20T15:54:18.811730Z], OS[Linux/4.19.202/amd64], JVM[Oracle Corporation/OpenJDK 64-Bit Server VM/12.0.1/12.0.1+12]" }

{"type": "server", "timestamp": "2022-08-07T16:36:51,509+0000", "level": "INFO", "component": "o.e.n.Node", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "JVM home [/usr/share/elasticsearch/jdk]" }

{"type": "server", "timestamp": "2022-08-07T16:36:51,509+0000", "level": "INFO", "component": "o.e.n.Node", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "JVM arguments [-Xms1g, -Xmx1g, -XX:+UseConcMarkSweepGC, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -Des.networkaddress.cache.ttl=60, -Des.networkaddress.cache.negative.ttl=10, -XX:+AlwaysPreTouch, -Xss1m, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djna.nosys=true, -XX:-OmitStackTraceInFastThrow, -Dio.netty.noUnsafe=true, -Dio.netty.noKeySetOptimization=true, -Dio.netty.recycler.maxCapacityPerThread=0, -Dlog4j.shutdownHookEnabled=false, -Dlog4j2.disable.jmx=true, -Djava.io.tmpdir=/tmp/elasticsearch-13435502895208845015, -XX:+HeapDumpOnOutOfMemoryError, -XX:HeapDumpPath=data, -XX:ErrorFile=logs/hs_err_pid%p.log, -Xlog:gc*,gc+age=trace,safepoint:file=logs/gc.log:utctime,pid,tags:filecount=32,filesize=64m, -Djava.locale.providers=COMPAT, -Des.cgroups.hierarchy.override=/, -Xms512m, -Xmx512m, -Dio.netty.allocator.type=unpooled, -XX:MaxDirectMemorySize=268435456, -Des.path.home=/usr/share/elasticsearch, -Des.path.conf=/usr/share/elasticsearch/config, -Des.distribution.flavor=default, -Des.distribution.type=docker, -Des.bundled_jdk=true]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,254+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [aggs-matrix-stats]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,254+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [analysis-common]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,254+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [data-frame]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,255+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [ingest-common]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,255+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [ingest-geoip]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,256+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [ingest-user-agent]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,256+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [lang-expression]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,307+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [lang-mustache]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,310+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [lang-painless]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,310+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [mapper-extras]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,311+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [parent-join]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,311+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [percolator]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,312+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [rank-eval]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,312+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [reindex]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,313+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [repository-url]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,314+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [transport-netty4]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,318+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [x-pack-ccr]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,318+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [x-pack-core]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,318+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [x-pack-deprecation]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,318+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [x-pack-graph]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,320+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [x-pack-ilm]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,321+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [x-pack-logstash]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,321+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [x-pack-ml]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,322+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [x-pack-monitoring]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,323+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [x-pack-rollup]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,323+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [x-pack-security]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,323+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [x-pack-sql]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,324+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "loaded module [x-pack-watcher]" }

{"type": "server", "timestamp": "2022-08-07T16:36:55,324+0000", "level": "INFO", "component": "o.e.p.PluginsService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "no plugins loaded" }

{"type": "server", "timestamp": "2022-08-07T16:37:04,660+0000", "level": "INFO", "component": "o.e.x.s.a.s.FileRolesStore", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "parsed [0] roles from file [/usr/share/elasticsearch/config/roles.yml]" }

{"type": "server", "timestamp": "2022-08-07T16:37:06,233+0000", "level": "INFO", "component": "o.e.x.m.p.l.CppLogMessageHandler", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "[controller/87] [Main.cc@110] controller (64 bit): Version 7.2.0 (Build 65aefcbfce449b) Copyright (c) 2019 Elasticsearch BV" }

{"type": "server", "timestamp": "2022-08-07T16:37:07,125+0000", "level": "DEBUG", "component": "o.e.a.ActionModule", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "Using REST wrapper from plugin org.elasticsearch.xpack.security.Security" }

{"type": "server", "timestamp": "2022-08-07T16:37:07,756+0000", "level": "INFO", "component": "o.e.d.DiscoveryModule", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "using discovery type [single-node] and seed hosts providers [settings]" }

{"type": "server", "timestamp": "2022-08-07T16:37:09,448+0000", "level": "INFO", "component": "o.e.n.Node", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "initialized" }

{"type": "server", "timestamp": "2022-08-07T16:37:09,449+0000", "level": "INFO", "component": "o.e.n.Node", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "starting ..." }

{"type": "server", "timestamp": "2022-08-07T16:37:09,748+0000", "level": "INFO", "component": "o.e.t.TransportService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "publish_address {172.17.0.10:9300}, bound_addresses {0.0.0.0:9300}" }

{"type": "server", "timestamp": "2022-08-07T16:37:09,816+0000", "level": "WARN", "component": "o.e.b.BootstrapChecks", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]" }

{"type": "server", "timestamp": "2022-08-07T16:37:09,842+0000", "level": "INFO", "component": "o.e.c.c.Coordinator", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "setting initial configuration to VotingConfiguration{v1QsbFFSQ-mUflFRqF6yow}" }

{"type": "server", "timestamp": "2022-08-07T16:37:10,134+0000", "level": "INFO", "component": "o.e.c.s.MasterService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "elected-as-master ([1] nodes joined)[{elasticsearch-0}{v1QsbFFSQ-mUflFRqF6yow}{Myle3-pZT12szdtlfa-JWw}{172.17.0.10}{172.17.0.10:9300}{ml.machine_memory=838860800, xpack.installed=true, ml.max_open_jobs=20} elect leader, _BECOME_MASTER_TASK_, _FINISH_ELECTION_], term: 1, version: 1, reason: master node changed {previous [], current [{elasticsearch-0}{v1QsbFFSQ-mUflFRqF6yow}{Myle3-pZT12szdtlfa-JWw}{172.17.0.10}{172.17.0.10:9300}{ml.machine_memory=838860800, xpack.installed=true, ml.max_open_jobs=20}]}" }

{"type": "server", "timestamp": "2022-08-07T16:37:10,206+0000", "level": "INFO", "component": "o.e.c.c.CoordinationState", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "cluster UUID set to [puvYGgbfR_-FUa90MuW8-A]" }

{"type": "server", "timestamp": "2022-08-07T16:37:10,229+0000", "level": "INFO", "component": "o.e.c.s.ClusterApplierService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "message": "master node changed {previous [], current [{elasticsearch-0}{v1QsbFFSQ-mUflFRqF6yow}{Myle3-pZT12szdtlfa-JWw}{172.17.0.10}{172.17.0.10:9300}{ml.machine_memory=838860800, xpack.installed=true, ml.max_open_jobs=20}]}, term: 1, version: 1, reason: Publication{term=1, version=1}" }

{"type": "server", "timestamp": "2022-08-07T16:37:10,336+0000", "level": "INFO", "component": "o.e.h.AbstractHttpServerTransport", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "cluster.uuid": "puvYGgbfR_-FUa90MuW8-A", "node.id": "v1QsbFFSQ-mUflFRqF6yow", "message": "publish_address {172.17.0.10:9200}, bound_addresses {0.0.0.0:9200}" }

{"type": "server", "timestamp": "2022-08-07T16:37:10,336+0000", "level": "INFO", "component": "o.e.n.Node", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "cluster.uuid": "puvYGgbfR_-FUa90MuW8-A", "node.id": "v1QsbFFSQ-mUflFRqF6yow", "message": "started" }

{"type": "server", "timestamp": "2022-08-07T16:37:10,554+0000", "level": "INFO", "component": "o.e.g.GatewayService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "cluster.uuid": "puvYGgbfR_-FUa90MuW8-A", "node.id": "v1QsbFFSQ-mUflFRqF6yow", "message": "recovered [0] indices into cluster_state" }

{"type": "server", "timestamp": "2022-08-07T16:37:10,952+0000", "level": "INFO", "component": "o.e.c.m.MetaDataIndexTemplateService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "cluster.uuid": "puvYGgbfR_-FUa90MuW8-A", "node.id": "v1QsbFFSQ-mUflFRqF6yow", "message": "adding template [.triggered_watches] for index patterns [.triggered_watches*]" }

{"type": "server", "timestamp": "2022-08-07T16:37:11,222+0000", "level": "INFO", "component": "o.e.c.m.MetaDataIndexTemplateService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "cluster.uuid": "puvYGgbfR_-FUa90MuW8-A", "node.id": "v1QsbFFSQ-mUflFRqF6yow", "message": "adding template [.watch-history-9] for index patterns [.watcher-history-9*]" }

{"type": "server", "timestamp": "2022-08-07T16:37:11,262+0000", "level": "INFO", "component": "o.e.c.m.MetaDataIndexTemplateService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "cluster.uuid": "puvYGgbfR_-FUa90MuW8-A", "node.id": "v1QsbFFSQ-mUflFRqF6yow", "message": "adding template [.watches] for index patterns [.watches*]" }

{"type": "server", "timestamp": "2022-08-07T16:37:11,349+0000", "level": "INFO", "component": "o.e.c.m.MetaDataIndexTemplateService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "cluster.uuid": "puvYGgbfR_-FUa90MuW8-A", "node.id": "v1QsbFFSQ-mUflFRqF6yow", "message": "adding template [.monitoring-logstash] for index patterns [.monitoring-logstash-7-*]" }

{"type": "server", "timestamp": "2022-08-07T16:37:11,529+0000", "level": "INFO", "component": "o.e.c.m.MetaDataIndexTemplateService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "cluster.uuid": "puvYGgbfR_-FUa90MuW8-A", "node.id": "v1QsbFFSQ-mUflFRqF6yow", "message": "adding template [.monitoring-es] for index patterns [.monitoring-es-7-*]" }

{"type": "server", "timestamp": "2022-08-07T16:37:11,636+0000", "level": "INFO", "component": "o.e.c.m.MetaDataIndexTemplateService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "cluster.uuid": "puvYGgbfR_-FUa90MuW8-A", "node.id": "v1QsbFFSQ-mUflFRqF6yow", "message": "adding template [.monitoring-beats] for index patterns [.monitoring-beats-7-*]" }

{"type": "server", "timestamp": "2022-08-07T16:37:11,721+0000", "level": "INFO", "component": "o.e.c.m.MetaDataIndexTemplateService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "cluster.uuid": "puvYGgbfR_-FUa90MuW8-A", "node.id": "v1QsbFFSQ-mUflFRqF6yow", "message": "adding template [.monitoring-alerts-7] for index patterns [.monitoring-alerts-7]" }

{"type": "server", "timestamp": "2022-08-07T16:37:11,807+0000", "level": "INFO", "component": "o.e.c.m.MetaDataIndexTemplateService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "cluster.uuid": "puvYGgbfR_-FUa90MuW8-A", "node.id": "v1QsbFFSQ-mUflFRqF6yow", "message": "adding template [.monitoring-kibana] for index patterns [.monitoring-kibana-7-*]" }

{"type": "server", "timestamp": "2022-08-07T16:37:11,839+0000", "level": "INFO", "component": "o.e.x.i.a.TransportPutLifecycleAction", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "cluster.uuid": "puvYGgbfR_-FUa90MuW8-A", "node.id": "v1QsbFFSQ-mUflFRqF6yow", "message": "adding index lifecycle policy [watch-history-ilm-policy]" }

{"type": "server", "timestamp": "2022-08-07T16:37:12,059+0000", "level": "INFO", "component": "o.e.l.LicenseService", "cluster.name": "docker-cluster", "node.name": "elasticsearch-0", "cluster.uuid": "puvYGgbfR_-FUa90MuW8-A", "node.id": "v1QsbFFSQ-mUflFRqF6yow", "message": "license [310f9556-586f-4d69-b6bc-a60323515cf3] mode [basic] - valid" }

ㅇ This is the log of a normal startup.
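The JVM arguments line in this log lists both the default -Xms1g/-Xmx1g and the -Xms512m/-Xmx512m from ES_JAVA_OPTS, while the reported heap size is about 503.6mb. That is consistent with the later flag taking effect. The sketch below reproduces the arithmetic under that assumption; `effective_max_heap` is a hypothetical helper, and the argument list is shortened from the log.

```python
import re

# Multipliers for the size suffixes accepted by -Xmx.
_UNITS = {"k": 1024, "m": 1024**2, "g": 1024**3}


def effective_max_heap(jvm_args):
    """Return the max heap in bytes from the last -Xmx flag, or None if absent."""
    result = None
    for arg in jvm_args:
        match = re.fullmatch(r"-Xmx(\d+)([kKmMgG]?)", arg)
        if match:
            # A later -Xmx overrides any earlier one.
            result = int(match.group(1)) * _UNITS.get(match.group(2).lower(), 1)
    return result


# Shortened from the "JVM arguments" line in the log above.
args = ["-Xms1g", "-Xmx1g", "-Xss1m", "-Xms512m", "-Xmx512m"]
heap = effective_max_heap(args)
print(heap // 1024**2, "MiB max heap")

# Value of ml.machine_memory reported in the same log.
machine_memory = 838860800
print(machine_memory // 1024**2, "MiB machine memory")
```

A 512 MiB heap cannot fit inside the 200Mi memory limit of the manifest shown earlier, and the log reports ml.machine_memory=838860800, which is 800 MiB. The post does not mention a change to the limit, so it is worth comparing the heap setting with the memory limit actually applied.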

ㅁ Verifying access to Elasticsearch

{
  "name" : "elasticsearch-0",
  "cluster_name" : "docker-cluster",
  "cluster_uuid" : "peBeG9eTR-yvYRftDKMGRQ",
  "version" : {
    "number" : "7.2.0",
    "build_flavor" : "default",
    "build_type" : "docker",
    "build_hash" : "508c38a",
    "build_date" : "2019-06-20T15:54:18.811730Z",
    "build_snapshot" : false,
    "lucene_version" : "8.0.0",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}
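A response like the one above can be checked programmatically rather than by eye. The following sketch parses such a body and pulls out the fields most useful for a smoke test; `summarize_banner` is a hypothetical helper, and the sample string is shortened from the response shown above.

```python
import json


def summarize_banner(text):
    """Extract node, cluster and version details from the root endpoint response."""
    info = json.loads(text)
    version = info["version"]
    return {
        "node": info["name"],
        "cluster": info["cluster_name"],
        "version": version["number"],
        "lucene": version["lucene_version"],
    }


# Shortened copy of the response shown above.
sample = """
{
  "name" : "elasticsearch-0",
  "cluster_name" : "docker-cluster",
  "version" : {
    "number" : "7.2.0",
    "lucene_version" : "8.0.0"
  },
  "tagline" : "You Know, for Search"
}
"""

print(summarize_banner(sample))
```

Comparing the reported version with the image tag in the manifest (elastic/elasticsearch:7.2.0) is a quick way to confirm that the expected build is the one answering.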

ㅁ Recommended reading (23.2.5)

Building an EFK stack with fluentd on top of Elasticsearch (with kubernetes)

nangman14.tistory.com
